NOAA: AI Arctic Boat Navigation

Quick links: presentation poster with the highlights, oral presentation slides with more details, code repository (more documentation coming soon), details on my conference presentations at the 2023 American Meteorological Society conference.

The National Oceanic and Atmospheric Administration (NOAA) sponsors missions that send uncrewed surface vehicles (USVs) to conduct science in the Arctic Ocean. USVs, boats with no humans onboard, are a promising new technology because they can be cheaper, more durable, and lower-emission than the vessels used on crewed missions. One important area of study examines the interactions between air, water, and sea ice to better understand the role these interactions play in climate change.

USVs can be piloted from land via satellite link, but bandwidth limitations often mean that pilots must set waypoints for the USV to follow automatically rather than steering the craft through its every move. In 2019, a USV on a NOAA mission got stuck in the ice it was studying and suffered damage to its rudder. This incident shows that to send USVs close to sea ice without constant manual guidance, we need an algorithm that detects sea ice using the sensors onboard the USV and lets the USV navigate near the ice without running into it.

Previous attempts to find a usable relationship between water temperature and ice presence, or between water salinity and ice presence, were unsuccessful. In this project, I therefore sought to develop an algorithm that lets USVs navigate around sea ice using onboard cameras. I did this work in the summer of 2022 as a paid machine learning intern at NOAA’s Pacific Marine Environmental Laboratory under the Ernest F. Hollings Undergraduate Scholarship.

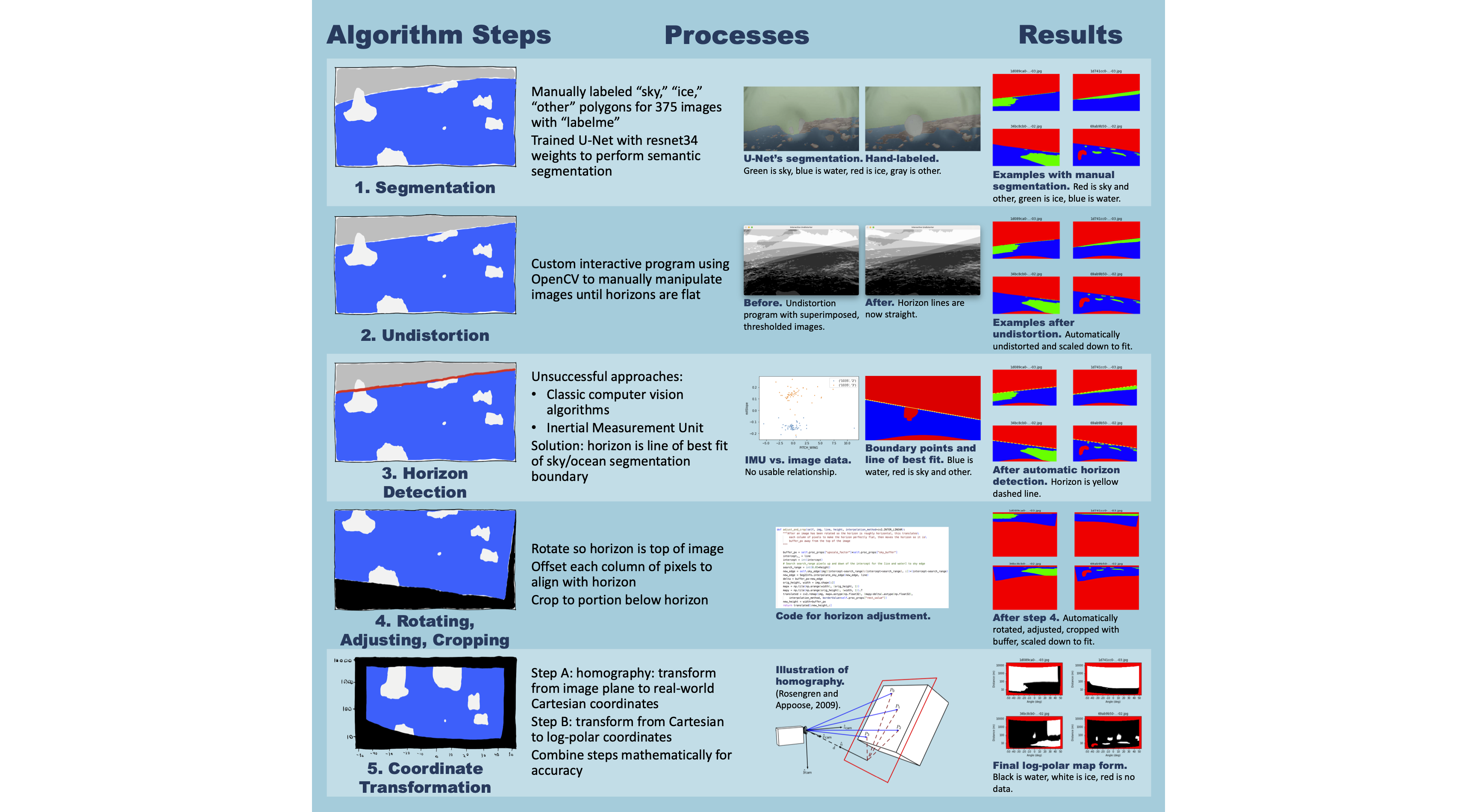

Ultimately, the algorithm I came up with has five steps:

1. Train a state-of-the-art neural network architecture called a U-Net to classify each pixel of an image as sky, water, ice, or other (this task is called “semantic segmentation”)

2. Remove lens distortion from the image

3. Detect the horizon in the image

4. Transform the image such that the horizon forms its top edge, removing the sky portion

5. Change coordinate systems so the result is a map in polar coordinates of where the ice is in the portion of ocean visible in the image
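To make the last three steps more concrete, here is a minimal Python sketch of one way to go from a segmented image to polar ice coordinates. It is a simplification under stated assumptions, not the project's actual implementation: the label encoding, the function names `fit_horizon` and `ice_polar_points`, and the parameters `focal_px` and `camera_height_m` are all hypothetical. It also assumes a flat sea, a level pinhole camera, and an image that has already had lens distortion removed. Instead of warping the image so the horizon becomes its top edge, it measures each ice pixel's distance below a fitted horizon line directly.

```python
import math

# Hypothetical label encoding; the project's real encoding is not given here.
SKY, WATER, ICE, OTHER = 0, 1, 2, 3


def fit_horizon(mask):
    """Fit row = a * col + b through the sky boundary by least squares."""
    points = []
    for col in range(len(mask[0])):
        sky_rows = [r for r in range(len(mask)) if mask[r][col] == SKY]
        if sky_rows:
            # The boundary sits half a pixel below the lowest sky pixel.
            points.append((col, max(sky_rows) + 0.5))
    if not points:
        raise ValueError("no sky pixels, cannot locate a horizon")
    n = len(points)
    mean_c = sum(c for c, _ in points) / n
    mean_r = sum(r for _, r in points) / n
    var_c = sum((c - mean_c) ** 2 for c, _ in points)
    cov = sum((c - mean_c) * (r - mean_r) for c, r in points)
    a = cov / var_c if var_c else 0.0
    return a, mean_r - a * mean_c


def ice_polar_points(mask, focal_px, camera_height_m):
    """Return (range_m, bearing_deg) for every ice pixel below the horizon."""
    a, b = fit_horizon(mask)
    center_col = (len(mask[0]) - 1) / 2
    result = []
    for r, row in enumerate(mask):
        for c, label in enumerate(row):
            below = r - (a * c + b)  # pixel rows below the fitted horizon
            if label != ICE or below <= 0:
                continue
            # Flat-sea pinhole model: forward distance shrinks as the
            # pixel moves further below the horizon.
            forward_m = camera_height_m * focal_px / below
            bearing = math.atan((c - center_col) / focal_px)
            result.append((forward_m / math.cos(bearing), math.degrees(bearing)))
    return result


# Tiny 4x3 example: two rows of sky, one ice pixel just below the horizon.
example = [
    [SKY, SKY, SKY],
    [SKY, SKY, SKY],
    [WATER, ICE, WATER],
    [WATER, WATER, WATER],
]
print(ice_polar_points(example, focal_px=100.0, camera_height_m=2.0))
```

In this model, range grows very quickly for pixels near the horizon, so a small error in the detected horizon line can become a large error in the estimated distance of far-away ice.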

Step 1, the machine learning step, achieves a Dice score of 0.825 on a scale of 0 to 1. Downstream parameters such as the detected horizon location come out similar whether the later steps are given automatically segmented images or manually labeled ones. More robust testing is needed to assess the quantitative accuracy of the final maps the algorithm produces, but these maps are qualitatively informative. Please see my poster and oral presentation slides for more technical information.
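For readers unfamiliar with the Dice score, the sketch below shows how it can be computed for a segmentation mask. For one class, it is twice the number of pixels where prediction and ground truth agree on that class, divided by the total number of pixels assigned to that class in either mask. The function names and the unweighted per-class averaging in `mean_dice` are assumptions for illustration; how the 0.825 figure was aggregated across classes is not stated here.

```python
def dice_score(pred, truth, label):
    """Dice coefficient for one class over two same-shaped label grids."""
    pred_n = truth_n = both = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            pred_n += (p == label)
            truth_n += (t == label)
            both += (p == label and t == label)
    total = pred_n + truth_n
    # Convention assumed here: a class absent from both masks scores 1.
    return 1.0 if total == 0 else 2 * both / total


def mean_dice(pred, truth, labels):
    """Unweighted average of the per-class Dice scores (an assumption)."""
    return sum(dice_score(pred, truth, label) for label in labels) / len(labels)


# Toy 2x2 masks with three classes; one pixel is misclassified.
predicted = [[0, 0], [1, 2]]
labeled = [[0, 1], [1, 2]]
print(mean_dice(predicted, labeled, labels=[0, 1, 2]))
```

A score of 1 means the predicted and labeled masks match exactly for that class, and a score of 0 means they do not overlap at all.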

In January 2023, I presented my work at the American Meteorological Society meeting, both through an oral presentation at the 22nd Conference on Artificial Intelligence for Environmental Science’s session on Artificial Intelligence for Feature Detection and through a poster at the 22nd Annual Student Conference’s session on AI and Machine Learning. More information on those presentations can be found here.